
Monthly AI Newsletter from PortNLP Lab

Issue #1 | October 2024

Hi and welcome to the very first issue of explain! Each month, we’re sharing fresh insights into the world of responsible artificial intelligence (AI) – because it’s not just about what AI can do, but also about how it does what it does and how we can use it responsibly.

Based in Portland State University’s Computer Science department, our lab focuses on large language models (LLMs). We’re thrilled to have you with us on this journey. Let’s dive in!

In the Know

Earlier this month, Geoffrey Hinton and John Hopfield were awarded the 2024 Nobel Prize in Physics for their foundational work in neural networks, which helped shape modern AI. Their early research in the 1980s laid the groundwork for today’s models like ChatGPT, drawing from physics concepts such as statistical mechanics. This recognition emphasizes the deep interconnection between physics and machine learning. READ MORE

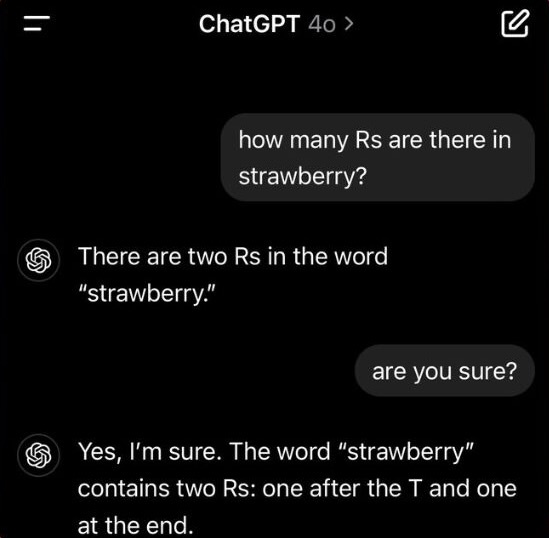

Did you know that some AI models can’t correctly count the number of ‘r’s in a word like ‘strawberry’? The issue comes down to two factors. First, LLMs rely on tokenization, which breaks words into smaller pieces and can obscure individual letters. Second, they generate outputs by predicting the most likely sequence of tokens instead of truly understanding or tracking information like letter counts.

READ MORE
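To make the tokenization point concrete, here is a minimal Python sketch. The vocabulary, the token IDs, and the greedy longest-match rule are all hypothetical simplifications: real tokenizers (for example, byte-pair encoding) learn their vocabularies from data, so the actual split of “strawberry” varies from model to model.

```python
# Hypothetical subword vocabulary mapping pieces to token IDs.
# Real models learn their vocabularies from data; these entries are illustrative only.
VOCAB = {
    "str": 101, "aw": 102, "berry": 103,
    # single letters as a fallback so any word made of them can be segmented
    "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8,
}


def tokenize(word: str, vocab: dict[str, int]) -> list[str]:
    """Greedy longest-match segmentation (a simplification of real subword tokenizers)."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest vocabulary entry that matches starting at position i.
        for end in range(len(word), i, -1):
            if word[i:end] in vocab:
                pieces.append(word[i:end])
                i = end
                break
        else:
            raise ValueError(f"no vocabulary entry matches {word[i:]!r}")
    return pieces


word = "strawberry"
pieces = tokenize(word, VOCAB)
ids = [VOCAB[p] for p in pieces]  # what the model actually receives as input

print(pieces)           # ['str', 'aw', 'berry']
print(ids)              # [101, 102, 103]
print(word.count("r"))  # 3, trivial when the individual characters are visible
```

Counting letters on the raw string is easy, but a model that only receives the IDs `[101, 102, 103]` has to learn indirectly how many r’s each token contains, which helps explain why such counts can go wrong.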

Get Involved

RESPONSIBLE AI TALK | 11/21 @ 12:00PM

Are you interested in learning more about responsible AI? Come join us for a presentation by Sara Tangdall, Responsible AI Product Director at Salesforce. This event will explore the importance of ethical AI development and practical approaches to making AI accountable.

Topic: Responsible AI

Location: FAB-10

Date: Thursday, November 21

Time: 12:00 PM - 1:00 PM PST

YOUNG AI LEADERS COMMUNITY

For passionate individuals aged 18-30, here's an opportunity to help ensure that AI benefits everyone. Click here to learn more. The deadline to apply is December 1, 2024.

Spotlight on Us

We are excited to share that we will present the following three papers at EMNLP 2024:

LLMs typically mix what you provide (contextual knowledge) with their own embedded knowledge (parametric knowledge) when responding to open-ended questions in knowledge-consistent scenarios. “When Context Leads but Parametric Memory Follows in Large Language Models”, Tao et al., at EMNLP 2024 in Miami, Florida.

Many LLMs have super-long context windows, but how well can they use them? “Multilingual Evaluation of Long Context Retrieval and Reasoning”, Agrawal et al., at the Multilingual Representation Learning Workshop at EMNLP 2024 [Honorable Mention].

Our research shows that unreliable news stories predominantly consist of subjective statements, in contrast to reliable ones. “Improving Explainable Fact-Checking via Sentence-Level Factual Reasoning”, Vargas et al., at the Fact Extraction and VERification Workshop at EMNLP 2024.

And we’ve got exciting news: Ph.D. student Amber Shore has been selected for the 2024-2025 CLS Refresh program, funded by the U.S. Department of State! She’ll be receiving personalized Ukrainian tutoring this spring. Congratulations, Amber!

Chief Editor of the Month: Yufei Tao

Contributors: Ameeta Agrawal, Ellyn Ayton, Sina Bagheri Nezhad, Rhitabrat Pokharel, Russell Scheinberg, Amber Shore
